Bash History Forensics

I recently had the privilege to do a decent amount of forensics on a bunch of Linux systems. I've been a Linux hobbyist since Ubuntu 8.04 but I would never have described myself as a power user. While I still have a lot to learn, here are a couple hints that helped me get through many, many lines of recorded user command history.

Before we get started, let me just point out where the .bash_history files are located:

You can find the "root" user's file at "/root/.bash_history" and the history file for a given <user> at "/home/<user>/.bash_history". Be sure to check for all users' .bash_history files.
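If you would rather not hunt for these by hand, here is a minimal sketch that lists them on a mounted image. The function name and the /mnt/evidence mount point are my own placeholders, and it assumes home directories live in the default locations (check /etc/passwd on the image if they do not).

```shell
# list_history_files MOUNT_POINT
# Print every .bash_history under the root and home directories of a mounted image.
# MOUNT_POINT (e.g. /mnt/evidence) is a hypothetical path; use wherever your image is mounted.
list_history_files() {
    mount_point=$1
    for dir in "$mount_point/root" "$mount_point/home"; do
        # Skip directories that do not exist on this image.
        [ -d "$dir" ] || continue
        # -maxdepth 2 covers root/.bash_history and home/<user>/.bash_history.
        find "$dir" -maxdepth 2 -name '.bash_history' -print
    done
}
```

Usage would look something like `list_history_files /mnt/evidence`. Symlinked history files are listed too, which is worth noticing on its own.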

Techniques

Things to check first

Before you jump right in and start trying to figure out what in the world a user was doing on a system, you should be aware of any pre-configured aliases that the user could be using as a shortcut.

The article by "crismblog" at http://community.linuxmint.com/tutorial/view/891 describes how you (or a bad guy) can create a bash alias with a simple command such as alias install="sudo apt-get install". Adding a line like that to a configuration file saves the alias for future sessions. Be sure to check the following common configuration files for aliases, and keep in mind there may be other files in use:

- /root/ or /home/<user>/

  - .bashrc

  - .profile

  - .alias

  - .alias-csh

  - Hint: Keep an eye out for other installed shells and their configs such as "C Shell". They usually also keep history files that can be analyzed in a similar fashion to bash history.

- /etc/

  - bash.bashrc

  - profile
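A quick way to pull alias definitions out of those files is a grep loop. This is only a sketch: the function name is made up, the path in the usage note is a placeholder, and .bash_profile and .bash_aliases are two extra file names I added to the list above because they are also commonly used.

```shell
# find_aliases HOME_DIR
# Print alias definitions (file:line:text) found in common shell config files
# under HOME_DIR, e.g. /mnt/evidence/home/alice (hypothetical path).
find_aliases() {
    home_dir=$1
    # .bash_profile and .bash_aliases are additions to the list in the post.
    for name in .bashrc .profile .bash_profile .bash_aliases .alias .alias-csh; do
        file="$home_dir/$name"
        [ -f "$file" ] || continue
        # Match lines that start with optional whitespace followed by "alias ".
        grep -nH -E '^[[:space:]]*alias[[:space:]]' "$file"
    done
    return 0
}
```

Run it once per home directory, for example `find_aliases /mnt/evidence/home/alice`. It will miss aliases defined in files sourced from elsewhere, so treat an empty result as "nothing obvious" rather than "nothing there".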

De-duplication and Sorting

After I get a read-only image mounted and check for aliases, I usually copy the bash history files for each user onto my analysis drive and save a second copy with a CSV file extension. This allows me to double-click it and open it right into Excel for de-duplication and sorting. De-duplication and sorting let me quickly look through all the recorded commands without wasting time on duplicate entries. It is also a convenient way to see how many unique IPs were used with SSH, which files were edited with vim/nano/etc., and so on.

To remove duplicates and sort with Excel, highlight everything with Ctrl+A, click on "Data" up at the top, and click on "Remove Duplicates". You can then click one of the sort buttons to group similar commands.
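If you do not have Excel handy, the same de-duplication and sorting can be roughed out on the command line. This is an alternative to the Excel workflow, not a replacement for the color coding, and the function name is just something I picked for illustration.

```shell
# unique_commands HISTORY_FILE
# Print each distinct command once, prefixed by how many times it occurred,
# sorted alphabetically so similar commands group together.
unique_commands() {
    # LC_ALL=C gives a predictable byte-wise sort order;
    # sed strips the padding uniq -c puts in front of the count.
    LC_ALL=C sort "$1" | uniq -c | sed 's/^ *//'
}
```

Piping the result through `grep ' ssh '` or `sort -rn` is a quick way to list the distinct SSH commands or to see the most frequently repeated commands first.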

I usually end up color coding commands I think are good/bad/suspicious by changing the cell's fill color. This allows me to skip some commands and keep track of what I have to come back to by using Excel's "Filter by color" feature. With this technique, I can easily see commands that weren't analyzed yet, all bad commands, or even color coded typos pretty quickly.

But wait, what about the context?

The de-duplication and sorting provides a quick, high level overview of the commands recorded, but you lose the important context that is timing. For most bash history files, the only explicit time context you can get is from the .bash_history file's last modified timestamp and the order in which the commands appear. You may be able to derive some other timing information by correlating logs or file metadata with related commands.

By looking at bash history in its raw form (in Excel or a good text editor), you can see the order in which commands were executed. The sorted history may have shown that the user ran a compression command such as rar, zip, or tar and an scp command with a remote IP address. The raw history may tell you these commands were executed adjacent to each other, which can put some puzzle pieces together.
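To jump from an interesting command in the sorted view back to its neighbors in the raw file, grep's context option does most of the work. A minimal sketch, with a function name and default of my own choosing:

```shell
# show_context HISTORY_FILE PATTERN [LINES]
# Print each command matching PATTERN (an extended regex) together with
# LINES commands before and after it, keeping the original line numbers.
show_context() {
    history_file=$1
    pattern=$2
    lines=${3:-3}   # default of 3 lines of context is an arbitrary choice
    grep -n -C "$lines" -E -- "$pattern" "$history_file"
}
```

Something like `show_context .bash_history '^scp ' 2` would show whether a tar or zip command sits right before each scp. In the output, matching lines are marked with ":" after the line number and context lines with "-".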

Timestamps? Sounds good!

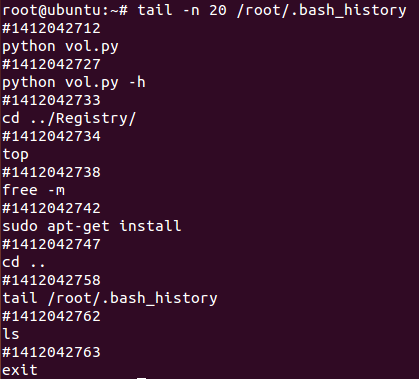

If you get lucky, the bash history may be recording the Epoch timestamp of every command. I've only come across this twice in the field, but it was pretty great. The post over at http://larsmichelsen.com/open-source/bash-timestamp-in-bash-history/ explains how bash history timestamp recording can be enabled with a command such as echo 'export HISTTIMEFORMAT="%F %T "' >> /etc/bash.bashrc and how timestamps can be displayed with the history command.

If you are lucky enough to have timestamp recording enabled, you should note that it will mess up your de-duplication and sorting efforts as it will add a lot of entries that look like #13342793 that won't provide much information when they are separated from their respective commands.
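One way around that is to glue each timestamp onto the command that follows it before sorting. Here is a rough sketch with awk; the function name is mine, and it assumes each command sits on a single line (lines of a multi-line command after the first will show "-" instead of a timestamp).

```shell
# pair_timestamps HISTORY_FILE
# Emit "EPOCH<TAB>COMMAND" for each command line. Commands that have no
# timestamp line directly before them get "-" in the first column.
pair_timestamps() {
    awk '
        # A line made only of "#" and digits is a timestamp: remember it.
        /^#[0-9]+$/ { ts = substr($0, 2); next }
        # Any other line is a command: attach the pending timestamp, then clear it.
        {
            stamp = (ts == "" ? "-" : ts)
            print stamp "\t" $0
            ts = ""
        }
    ' "$1"
}
```

The output opens cleanly as two columns in a spreadsheet, so you can sort on the command column without losing track of when each command ran.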

You can convert these timestamps with http://www.epochconverter.com/ or you can even use their suggested Excel formula =(A1 / 86400) + 25569 if you are an Excel ninja. Please note that these timestamps are recorded in UTC/GMT.
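On an analysis machine with GNU date you can also do the conversion locally. This sketch assumes GNU date's `-d @N` syntax (BSD/macOS date uses different options), and the function name is just for illustration.

```shell
# epoch_to_utc EPOCH
# Print an epoch value as a UTC date and time.
# Relies on GNU date's "-d @N" syntax, which is an assumption about your toolset.
epoch_to_utc() {
    date -u -d "@$1" '+%Y-%m-%d %H:%M:%S'
}
```

For example, `epoch_to_utc 1700000000` prints 2023-11-14 22:13:20.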

Tool Time

To provide some more context around executed commands, it is useful to know the working directory at the time of execution. The best way to do this is to follow the cd commands executed before the suspect command. For a busy busy user, this can be difficult to track down. To combat this problem, I created a script that you can git from https://github.com/davidpany/BashHistoryCDFinder. The screenshot below shows how easy it is to see a specified number of cd commands executed before one or more commands that start with a string of your input:

In this example, you can see exactly what directory I executed this ssh command from by following my directory changing trail. The more cd commands you choose to look at, the more context you may get.
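To be clear, the following is not the BashHistoryCDFinder script itself, just a rough awk sketch of the same idea for when you cannot pull the tool down. The function name, argument order, and output format are all my own assumptions here.

```shell
# cd_trail HISTORY_FILE PREFIX [COUNT]
# For every command that starts with PREFIX, print the COUNT most recent
# cd commands that came before it, then the command itself, with line numbers.
cd_trail() {
    awk -v prefix="$2" -v count="${3:-3}" '
        # Remember every cd command in order of appearance.
        $1 == "cd" { seen++; cds[seen] = NR ": " $0; next }
        # index() == 1 means the line begins with the literal prefix.
        index($0, prefix) == 1 {
            start = seen - count + 1
            if (start < 1) start = 1
            for (i = start; i <= seen; i++) print cds[i]
            print NR ": " $0
            print "--"
        }
    ' "$1"
}
```

Calling `cd_trail .bash_history ssh 5` would show up to five cd commands ahead of each ssh command. It does not resolve relative paths, `cd -`, or pushd/popd, so you still have to walk the trail yourself.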

What's the catch?

So yes, bash history logs are awesome and can provide tons of evidence, but there are a couple of things to look out for. First, it is possible to configure bash to discard commands by linking the .bash_history file to /dev/null, or to hide them by writing to a different file. If the history files look sparse, also check the configuration files listed above for any mention of /dev/null or a HISTFILE variable set to a non-standard file.
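Those two checks are easy to script. A minimal sketch, assuming the user's home directory on the mounted image is passed in; the function name is hypothetical, and matching every HIST* variable (not just HISTFILE) is my own widening of the search.

```shell
# check_history_tampering HOME_DIR
# Report a symlinked .bash_history and any history-related settings
# found in that user's common config files.
check_history_tampering() {
    home_dir=$1
    hist="$home_dir/.bash_history"
    if [ -L "$hist" ]; then
        # readlink prints the link target without following it.
        echo "symlink: $hist -> $(readlink "$hist")"
    fi
    for name in .bashrc .profile .bash_profile; do
        file="$home_dir/$name"
        [ -f "$file" ] || continue
        # HIST[A-Z]* catches HISTFILE plus related variables such as HISTSIZE.
        grep -nH -E 'HIST[A-Z]*|/dev/null' "$file"
    done
    return 0
}
```

Expect some noise from the /dev/null match, since plenty of harmless lines redirect output there. The same grep can be pointed at /etc/bash.bashrc and /etc/profile on the image.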

Also, bash history is usually only appended to the history files on a clean exit from the terminal. A clean exit can be a logout, an exit command, a reboot, or even a GUI terminal close. Here are a few reasons bash history may not be written to disk:

- loss of system power

- OS/terminal crash

- SSH connection ended without a proper logoff

- shell or terminal process killed forcibly, for example with kill -9 (Ctrl+Z only suspends a foreground job and does not by itself discard history)

If the user has the proper permissions, they can also just delete the .bash_history file or overwrite it with another file, although you may see the command they used to do that.

It is also worth noting that commands may be saved to the history file out of chronological order. This may be caused by multiple sessions open on the system concurrently that are closed out of order. While there is no good way to detect this without timestamp logging, it is important to keep in mind.
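When timestamp logging is enabled, a timestamp that is earlier than the one before it is a decent hint that sessions were written out of order. Here is a small sketch of that check; the function name and output format are my own.

```shell
# find_time_regressions HISTORY_FILE
# Print the line number of every timestamp that is earlier than the timestamp
# before it, which suggests the file holds interleaved sessions.
find_time_regressions() {
    awk '
        /^#[0-9]+$/ {
            ts = substr($0, 2)
            # Compare numerically, but print the original strings.
            if (prev != "" && ts + 0 < prev + 0) print NR ": " ts " < " prev
            prev = ts
        }
    ' "$1"
}
```

Each line of output marks a spot where the history steps backwards in time. That is a place to look more closely, not proof of anything by itself.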

Hopefully this post will help give you a starting point for dealing with huge .bash_history files and maybe some good ideas you might not have considered. Please share your tips with me on Twitter @DavidPany or in the comments here.

Thanks for reading!

-Dave